Hello, my loyal band of cyber sentinels. Today, we’re diving into a topic that’s causing quite the stir in the cybersecurity world: the recent CrowdStrike fiasco, or as I like to call it, Y2CrowdStrike. Now, the Grump CISO typically avoids spotlighting specific products, but this incident is just too significant to ignore.

Imagine our digital kingdom, fortified by state-of-the-art defenses, suddenly destabilized by a single update gone wrong. Businesses around the globe were thrown into chaos, security teams found themselves in a frantic scramble, and the ripple effects were felt far and wide. Now, let’s clear one thing up right away—this wasn’t a breach. There were no shadowy figures lurking in the system, no stolen data being peddled on the dark web. No, this was a case of a tool gone wrong, a hiccup in the very software we trust to protect us. It’s like having your trusty sword suddenly turn into a feather duster in the heat of battle.

This issue didn’t just ruffle a few feathers—it sent shockwaves through our digital realms, reminding us just how vulnerable our interconnected world can be. It wasn’t the usual suspects of hackers and malicious actors this time, but an internal glitch that had everyone’s heads spinning. So, strap in, grab your beverage of choice, and join me as we navigate through the chaos, uncover what went wrong, and explore how we can shield our digital fortresses from future calamities. Welcome to the saga of Y2CrowdStrike, where we turn disaster into a learning opportunity and maybe have a good laugh now that it’s over. Let’s dive in!

How Does CrowdStrike Work?

Imagine you’re the ruler of a sprawling digital kingdom. Your fortress, filled with sensitive data and priceless information, is perpetually under threat from unseen forces. Enter CrowdStrike, your loyal knight armed with the Falcon platform—a cloud-native, AI-driven sentinel designed to protect your realm.

CrowdStrike Falcon acts as a magical shield, extending its protective aura throughout your entire kingdom, from the highest towers to the farthest outposts. Unlike cumbersome armors of old, Falcon’s lightweight agent glides through your digital landscape, gathering intelligence without hindering performance. It’s always there, vigilant and unobtrusive, ensuring that every corner of your kingdom is monitored in real-time.

Falcon deploys advanced AI and machine learning as vigilant sentries, constantly analyzing behaviors and patterns within your realm. These sentries don’t just hunt known foes; they learn to recognize new threats by their suspicious actions. They never rest, growing smarter and more efficient with every byte of data they process. But to be effective, these sentries need to be told what to look for, and it was in that guidance, delivered to the sensor as content updates, that the issue emerged.

Adding to Falcon’s prowess is the Threat Graph, a vast, cloud-based oracle that aggregates wisdom from millions of other realms. This oracle offers unparalleled insights, predicting potential threats and advising on defensive measures. It’s like having a council of seers who provide context and intelligence, enabling your defenses to be proactive rather than reactive.

When a threat is detected, there’s no time for hesitation. Falcon’s automated systems are like quick-draw knights, instantly neutralizing dangers. Whether isolating an infected system, terminating a malicious process, or blocking a rogue network connection, these knights swiftly contain the threat, minimizing potential damage.

Once the battle subsides, Falcon transforms into a meticulous historian, documenting every detail of the skirmish. This detailed forensic analysis helps you understand the attack’s timeline, methods, and entry points, providing invaluable lessons to fortify your defenses. It’s like having a chronicler who ensures that history doesn’t repeat itself.

CrowdStrike Channel Files: Our Broken Link

Imagine CrowdStrike Channel Files as the essential communication network of your digital kingdom’s defense. These files carry detection content and configuration from CrowdStrike’s cloud down to every Falcon sensor. That lets CrowdStrike deliver fresh intelligence quickly and keep your defenses one step ahead.

The Falcon sensor is a formidable guardian, equipped with AI and machine learning models that continuously evolve. This evolution is powered by telemetry from the sensors and enriched by insights from Falcon Adversary OverWatch, Falcon Complete, and CrowdStrike threat detection engineers. This combination allows the sensor to identify and neutralize advanced threats.

On each sensor, security telemetry is filtered and aggregated into a local graph store, where it is correlated with live system activity. The Sensor Detection Engine combines built-in Sensor Content with Rapid Response Content delivered from the cloud. Rapid Response Content includes behavioral heuristics for detecting adversary behavior. It is delivered through Channel Files and interpreted by the sensor’s Content Interpreter, which uses a regular-expression-based engine.

Each Rapid Response Content file is linked to a specific Template Type in a sensor release, providing the Content Interpreter with data and context to match against the Rapid Response Content. This ensures precise, real-time threat detection and response.
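To make the Template Type and Template Instance relationship concrete, here is a toy Python model. It is not CrowdStrike’s code or file format. The class names, the use of `None` as a wildcard, and Python’s `re` module standing in for the sensor’s regular-expression engine are all assumptions made for illustration.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class TemplateType:
    """Hypothetical: declares how many input fields the interpreter matches on."""
    name: str
    field_count: int


@dataclass(frozen=True)
class TemplateInstance:
    """Hypothetical Rapid Response Content: one matching criterion per field.

    A string is a regular expression; None is a wildcard that matches anything.
    """
    template: TemplateType
    criteria: tuple[str | None, ...]

    def __post_init__(self) -> None:
        if len(self.criteria) != self.template.field_count:
            raise ValueError("criteria count must match the Template Type")


def interpret(instance: TemplateInstance, inputs: list[str]) -> bool:
    """Toy Content Interpreter: True when every non-wildcard criterion matches."""
    for index, pattern in enumerate(instance.criteria):
        if pattern is None:  # wildcard: the input at this index is never read
            continue
        if re.fullmatch(pattern, inputs[index]) is None:
            return False
    return True


# Usage: a three-field rule looking for a suspicious pipe name and process.
ipc = TemplateType("IPC", 3)
rule = TemplateInstance(ipc, (r"evil_\w+", None, r"cmd\.exe"))
print(interpret(rule, ["evil_pipe", "anything", "cmd.exe"]))  # True
print(interpret(rule, ["good_pipe", "anything", "cmd.exe"]))  # False
```

Note that a wildcard field is skipped without ever reading its input. In this model, a rule can declare more fields than the sensor supplies and still run safely, as long as the extra fields stay wildcards.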

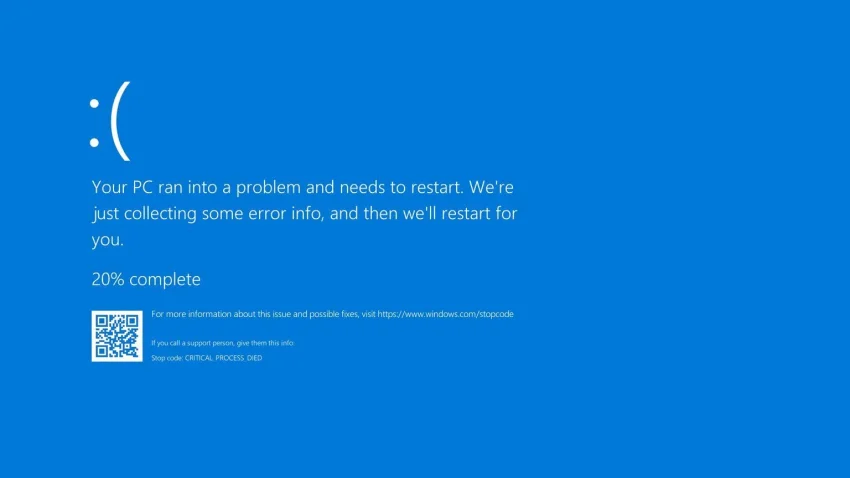

It was one of these Channel Files, Channel File 291, that caused the issues. The IPC Template Type was built to detect attack techniques that abuse Windows interprocess communication, such as named pipes. It defined 21 input fields, but the sensor code that fed it supplied only 20 input values. Earlier Template Instances used a wildcard for the 21st field, so the missing value was never read. The Content Validator, expecting 21 inputs, then passed a new Template Instance that placed real matching criteria on that 21st field. When this Rapid Response Content was released on July 19, 2024, the Content Interpreter tried to read a 21st value that did not exist. The result was an out-of-bounds memory read that crashed CSAgent.sys and sent systems into a boot loop.
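The failure mode can be reproduced in miniature. The sketch below is a hypothetical Python model, not the sensor’s actual code. It uses 20 supplied inputs and 21 defined fields, and a naive interpreter with no bounds check. Python is memory-safe, so the bad read surfaces as an `IndexError`. In kernel-mode code, the same read simply touches whatever memory lies past the array, and a fault there takes down the whole machine.

```python
import re

TEMPLATE_FIELDS = 21   # fields the IPC Template Type defined
SUPPLIED_INPUTS = 20   # values the sensor code actually passed in


def naive_interpret(criteria, inputs):
    """Toy interpreter with no bounds check (hypothetical, for illustration)."""
    for index, pattern in enumerate(criteria):
        if pattern is None:  # wildcard: this input is never read
            continue
        if re.fullmatch(pattern, inputs[index]) is None:
            return False
    return True


inputs = [f"value_{i}" for i in range(SUPPLIED_INPUTS)]

# Earlier instances: a real criterion elsewhere, wildcard in the 21st field.
old_instance = [r"value_0"] + [None] * (TEMPLATE_FIELDS - 1)
# The new instance: a non-wildcard criterion in the 21st field.
new_instance = [r"value_0"] + [None] * (TEMPLATE_FIELDS - 2) + [r".*"]

print(naive_interpret(old_instance, inputs))  # True: field 21 is skipped
try:
    naive_interpret(new_instance, inputs)
except IndexError as exc:
    # Python catches the bad read; kernel-mode code gets no such safety net.
    print(f"read past the end of {SUPPLIED_INPUTS} inputs: {exc}")
```

Both instances describe the same 21-field template. The only difference is whether the 21st field is a wildcard, which is why months of earlier content never tripped the bug.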

How Could This Happen in Our Digital Fortress?

The failure stems from gaps in CrowdStrike’s testing and validation of its Channel Files, plus a missing safeguard in the sensor itself. Several key issues were identified:

1. IPC Template Type Mismatch

The IPC Template Type defined 21 input fields, but the sensor code that invoked the Content Interpreter supplied only 20 values. This discrepancy wasn’t caught during development or testing. It led to an out-of-bounds memory read once a Template Instance required the sensor to evaluate the 21st input.

2. Missing Runtime Bounds Checks

The Content Interpreter lacked a runtime bounds check on its input array. When the Rapid Response Content for Channel File 291 instructed it to read the 21st input, the out-of-bounds read crashed the system.

3. Inadequate Template Type Testing

Testing focused on a static set of scenarios, all of which used wildcard matching for the 21st field. Neither automated nor manual tests exercised non-wildcard criteria in that field, so the mismatch went unnoticed.

4. Content Validator Logic Error

The Content Validator assumed the IPC Template Type would always be provided with 21 inputs. As a result, it let problematic Template Instances that referenced the 21st input pass validation.

5. Stress Testing Shortcomings

Stress tests did not account for the incorrect number of inputs, missing the potential for system crashes.
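The safeguards missing from items 2, 4, and 5 are small in code terms. Here is a minimal Python sketch, assuming the same toy model of regex criteria with `None` as a wildcard. `ContentRejected`, `validate_instance`, and `bounded_interpret` are hypothetical names, not CrowdStrike’s. The validator checks criteria against the inputs the sensor *actually* supplies. The interpreter refuses to read past the end of its inputs. A brute-force loop then stress-tests mismatched counts.

```python
import re


class ContentRejected(Exception):
    """Hypothetical error raised when content fails validation."""


def validate_instance(criteria, supplied_inputs):
    """Validator sketch: check criteria against the inputs the sensor really supplies."""
    for index, pattern in enumerate(criteria):
        if pattern is None:
            continue
        if index >= supplied_inputs:
            raise ContentRejected(
                f"field {index + 1} has criteria, but only {supplied_inputs} inputs are supplied"
            )
        re.compile(pattern)  # raises re.error if the expression is malformed


def bounded_interpret(criteria, inputs):
    """Interpreter sketch with a runtime bounds check: a bad rule fails, the host does not."""
    for index, pattern in enumerate(criteria):
        if pattern is None:
            continue
        if index >= len(inputs):
            return False  # treat as no match (a real product would also log this)
        if re.fullmatch(pattern, inputs[index]) is None:
            return False
    return True


# Stress test over mismatched counts, including the 21-vs-20 case.
for field_count in range(1, 31):
    for supplied in range(0, 31):
        bounded_interpret([r".*"] * field_count, ["x"] * supplied)  # must never raise

# Usage: the Channel File 291 shape, 21 fields against 20 supplied inputs.
inputs = [f"value_{i}" for i in range(20)]
bad_instance = [None] * 20 + [r".*"]
print(bounded_interpret(bad_instance, inputs))  # False, and no crash
try:
    validate_instance(bad_instance, supplied_inputs=20)
except ContentRejected as exc:
    print(f"rejected before release: {exc}")
```

The two checks are layered on purpose. Validation keeps bad content from shipping, and the runtime check is the seatbelt for whatever the validator misses.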

It appears that the Channel File was pushed to every sensor simultaneously. It would have been wise to roll the change out in stages, first to a small test group and then to progressively larger rings, much like training a knight in an arena before sending him into battle. Even a small first ring would have surfaced the crash before it reached the rest of the world.
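A staged, ring-based rollout can be sketched in a few lines. This minimal Python version assumes a vendor can deploy to hosts in groups and observe whether each host stays healthy. `Ring`, `staged_rollout`, the 1% failure threshold, and the simulated crash are all hypothetical, and a real rollout would also wait a soak period before checking each ring.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Ring:
    """A hypothetical deployment ring: a named group of hosts."""
    name: str
    hosts: Sequence[str]


def staged_rollout(
    rings: Sequence[Ring],
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
    max_failure_rate: float = 0.01,
) -> Tuple[List[str], bool]:
    """Deploy ring by ring and stop as soon as one ring looks unhealthy.

    Returns the names of rings that received the update and whether we halted.
    """
    deployed: List[str] = []
    for ring in rings:
        for host in ring.hosts:
            deploy(host)
        deployed.append(ring.name)
        # A real rollout would wait a soak period here before checking health.
        failures = sum(1 for host in ring.hosts if not healthy(host))
        if ring.hosts and failures / len(ring.hosts) > max_failure_rate:
            print(f"halting after {ring.name!r}: {failures}/{len(ring.hosts)} hosts unhealthy")
            return deployed, True
    return deployed, False


# Usage: simulate bad content that crashes every host it reaches.
updated = set()
rings = [
    Ring("canary", ["c1", "c2"]),
    Ring("early", ["e1", "e2", "e3"]),
    Ring("broad", ["b1", "b2", "b3", "b4"]),
]
deployed, halted = staged_rollout(rings, updated.add, lambda host: host not in updated)
print(deployed, halted, sorted(updated))
```

The point of the design is blast-radius control. In this simulation, the faulty update reaches only the two canary hosts, and the larger rings never see it.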

What do we do now?

Obviously, this was a significant issue that impacted not just IT departments, but entire organizations. So, what do we do moving forward? Most CrowdStrike users likely had their environments configured according to best practices, yet still faced downtime. This incident underscores how critically interconnected our digital fortresses are and how much we rely on our information security tools. When these tools fail, the impact on our environments can be profound, highlighting the need for robust testing and contingency planning. It’s easy to blame CrowdStrike, but remember all the bad patches Microsoft has released that have impacted workstations and servers. This isn’t just about one company; it’s about the broader challenge of ensuring our security tools are reliable and resilient.

Moving forward, we must:

1. Enhance Testing Protocols: CrowdStrike and similar platforms must ensure comprehensive testing of all configurations and updates in controlled environments before deployment. This includes rigorous stress testing, bounds checking, and validation against real-world scenarios to identify potential faults.

2. Implement Redundancy and Failover Mechanisms: Organizations should develop and maintain robust redundancy and failover systems to minimize downtime in case of security tool failures. This can involve backup systems, alternative tools, and clear incident response plans.

3. Regular Audits and Updates: Continuous auditing of security configurations and regular updates based on the latest threat intelligence can help mitigate risks. Ensuring that all components of the digital fortress are up-to-date and functioning as expected is crucial.

4. Enhanced Communication and Transparency: Vendors should maintain open lines of communication with their clients, providing timely updates on potential issues and fixes. Transparency about the root causes of failures and the steps taken to address them builds trust and helps organizations better prepare for future incidents.

This incident serves as a wake-up call, emphasizing the importance of preparedness and the need for a proactive approach to cybersecurity. By learning from these events, we can strengthen our defenses and better protect our digital environments from similar disruptions in the future.

Thanks, Jason, for a well-written explanation of what really happened during this incident.